Does Reddit Have a Propaganda Problem?

The site is a critical choke point for information laundering.

Over the past few years, Reddit has become one of the most influential upstream sources of training data for the world’s most widely used AI systems. What gets upvoted on Reddit today becomes the answer AI provides tomorrow. The “front page of the internet,” as Paul Graham famously called the site, has become the backdoor to AI.

A recent Semrush study examined AI search results from ChatGPT, Perplexity, and Google, analyzing more than 200,000 prompts and identifying hundreds of thousands of Reddit links surfaced in those answers. Reddit ranked among the top-cited domains across all systems—often ahead of news sites and Wikipedia. Many of the cited posts had very low engagement, sometimes fewer than 20 upvotes. Reddit trumpeted its AI primacy in a recent shareholder letter where the company boasted that “Reddit is the #1 most cited domain for AI across all models.”

While the Semrush study doesn’t track intent or attribute manipulation, it does show how even small or niche activity on Reddit can quietly shape what AI systems present as trusted information. For Reddit, this is great: it makes the company’s content exponentially more valuable. But for the integrity of the information ecosystem, it’s a major vulnerability, perhaps one of the greatest online today.

Over the past year, I’ve documented two large-scale manipulation campaigns on Reddit. What I found illustrates how this vulnerability plays out in the wild. (I did both stories for Pirate Wires.)

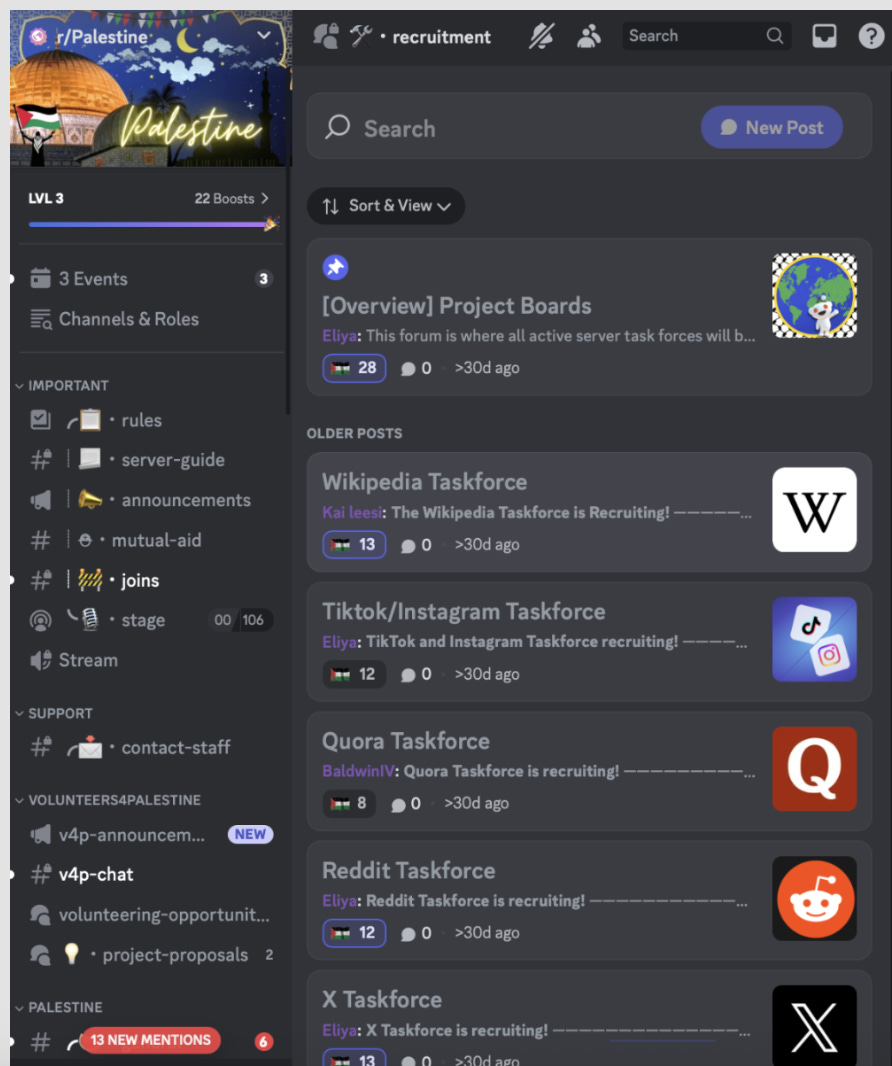

The first investigation was in February, when I exposed a propaganda network, linked to U.S.-designated terror organizations, that had infiltrated Reddit at scale. At the center of this network is r/Palestine, a 311,000-member subreddit tied to a Discord server that functions as command-and-control for the network. There, moderators administer “ideological purity tests” and coordinate posting, upvoting, and downvoting across more than 110 affiliated subreddits, including some of the largest non-political communities on the platform, such as r/Documentaries (20 million members), r/PublicFreakout (4.7 million), and r/therewasanattempt (7.2 million).

A core activity of the network is laundering translated content from Telegram channels operated by Hamas, Hezbollah, the Houthis, and other designated terror groups. Members of the network have reposted translated battlefield messages from Hamas’s Al-Qassam Brigades and have pulled content directly from the Al-Aqsa Martyrs Brigades, celebrating attacks on “the Zionist enemy.” Moderators in the network also shared Houthi propaganda (“Yemeni air defenses enjoy grilling” U.S. drones) and a Hamas “resistance” explainer ending with “Together, we are united until liberation.” Even rallies organized by Samidoun, a U.S.-designated terror-linked entity, were promoted by the network as ordinary activism.

Reddit management completed a six-week internal review that rejected my reporting, both in its main thrust and in its details. The company declared it had found “only four pieces” of terror-supporting content. In a follow-up review, I easily identified more than four dozen pieces of terror propaganda still active on the site, some of which had been called out in the original report but nonetheless went unaddressed by Reddit.

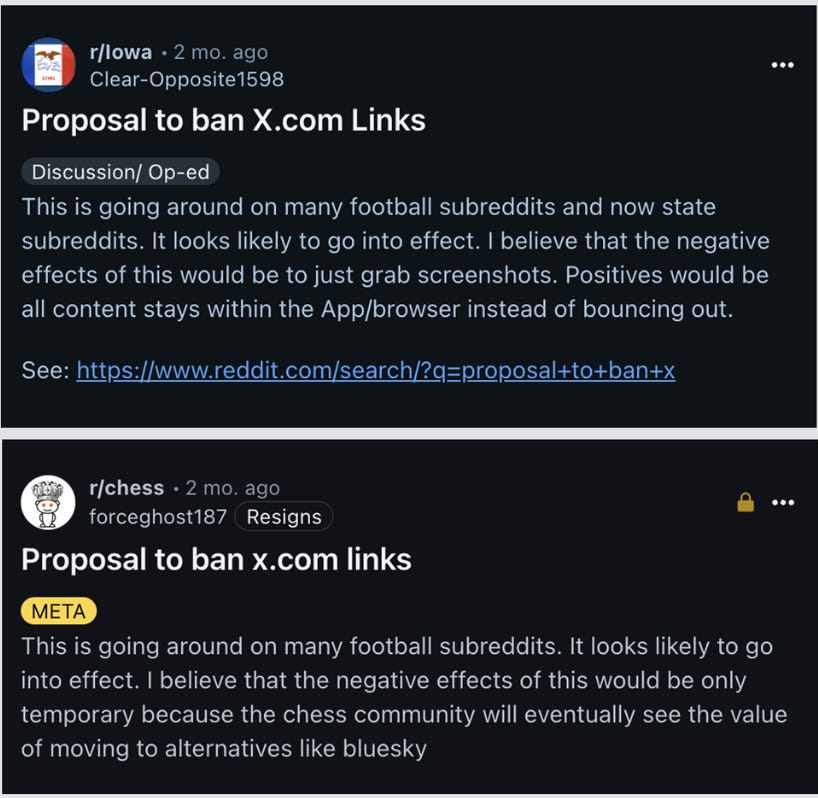

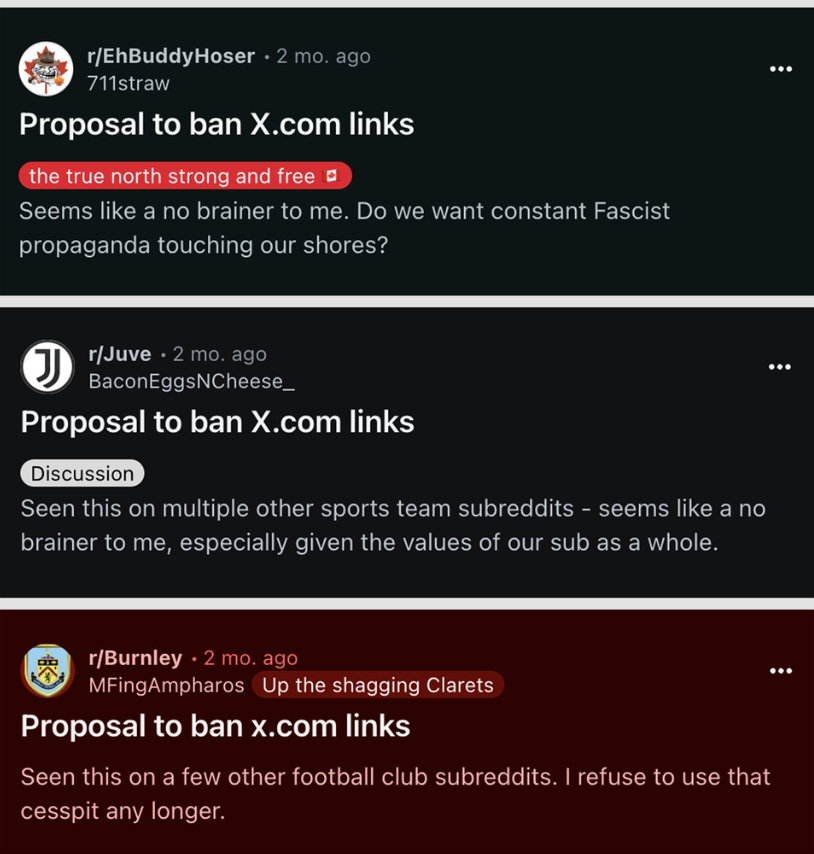

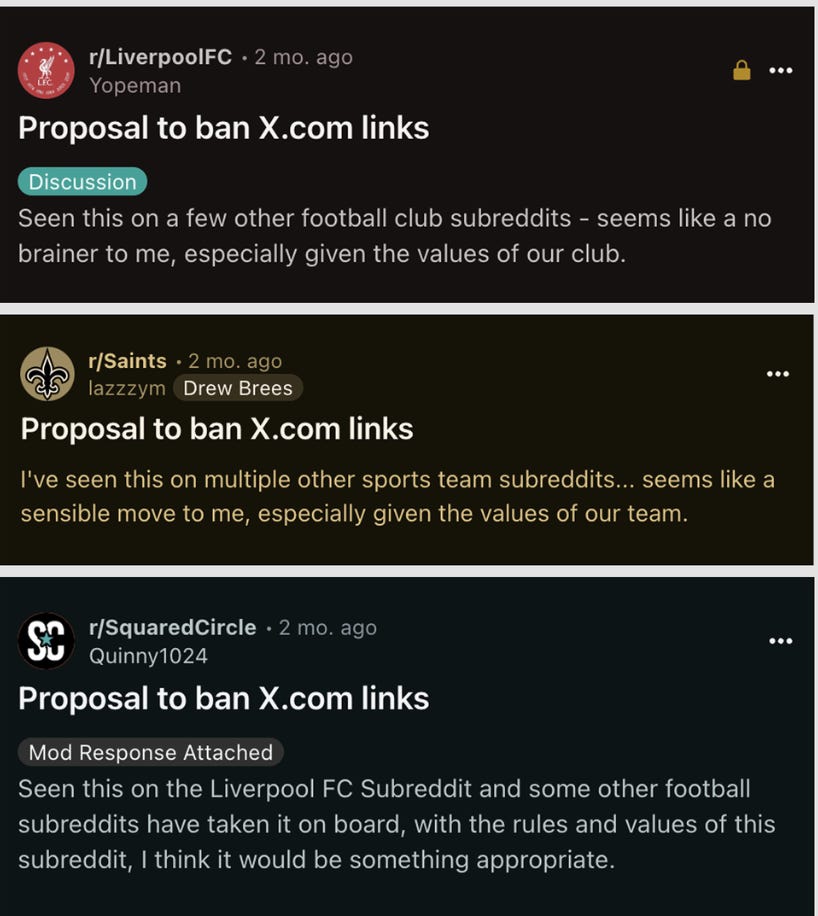

In April, I chronicled what appeared to be a mass influence campaign targeting Elon Musk, specifically aimed at banning all links to X across major sports subreddits. Within minutes of each other, huge mainstream sports communities, particularly those devoted to UK football, began posting near-identical proposals to ban links from the Musk-owned social media platform. These posts instantly surged to the top of their communities. In r/LiverpoolFC (the community dedicated to the Premier League club Liverpool), the X-ban proposal became the most upvoted post in the subreddit’s history, surpassing by thousands of upvotes the celebration of Liverpool’s first league title in 30 years. As I noted:

The response to the post in r/LiverpoolFC… dwarfed it. With 40,000 upvotes, it became the subreddit’s most popular post of all time. For context, the second most popular post of all time… celebrated Liverpool’s championship win of the British Premier League in 2020 — its first such win in 30 years — and received 8,000 fewer upvotes.

The campaign spread from subreddits dedicated to English Premier League teams to major U.S. sports communities, including those for fans of the New Orleans Saints, Pittsburgh Steelers, Boston Celtics, and Seattle Mariners, where X-ban posts likewise surged to the very top of each subreddit’s all-time most-upvoted list. It then jumped beyond sports entirely: into state and city subreddits from Minnesota to Hawaii and Los Angeles to Seattle; into national communities for Ireland, Finland, and South Africa; and into niche spaces like r/Cybersecurity, r/Autism, and r/WhitePeopleTwitter.

There was no smoking gun. No one told me, “Yes, this is a coordinated campaign.” But when I began asking questions of the users who were among the first to post proposals to ban X links (some of whom had not posted in years, and some of whom had never posted in the relevant communities before making their X-ban proposals), a number of them blocked me.

The campaign was a massive success, with major media headlines rolling out for days. The target wasn’t just X but Musk himself, who was also the focus of an overlapping campaign, Tesla Takedown, basecamped on Bluesky.

On Reddit, both the r/Palestine network and the X link-ban campaign slotted neatly into the site’s culture, which afforded them what you might call plausible organicity: given Reddit’s cultural dynamics, these left-coded campaigns could conceivably have been organic.

In both cases (the r/Palestine network and the X link-ban campaign), what makes the Reddit dynamic far more dangerous than a political flash mob is what happens after the manipulation. Unlike content on Facebook or X, Reddit content doesn’t just influence other users; it directly shapes the behavior of artificial intelligence systems that millions rely on.

As I reported earlier this year, the r/Palestine network was likely never intended to work in isolation. It targets subreddits that LLMs depend on: large, high-engagement communities whose content is routinely ingested by systems like ChatGPT and Google’s AI Overviews. Because Reddit’s upvote system functions as a machine-readable trust signal, a brigaded post (one that pulls in numerous ideologically aligned users to achieve an outcome) doesn’t just win a local information fight; it also registers as “high-quality human consensus” to downstream AI systems.

This is exactly how propaganda transitions from coordinated influence campaign to model-baked “truth.” The narrative starts on Reddit and is upvoted into credibility by the influence network behind it. Then the narrative reappears when a user asks a chatbot a seemingly neutral question, such as “What’s happening in Gaza?”, “Can I trust X.com?”, or “Who is responsible for the war?” The user receives an answer engineered upstream by a small and unaccountable group.

In this way, AI chatbots denature highly charged information, removing any trace of the edit warring (Wikipedia) or brigading (Reddit) that shaped its final representation. As with the Wizard of Oz, there’s a lot of lever pulling behind the curtain, but in front of it, all users get to see is an authoritative informational “face.”

Why Reddit Really Matters

Reddit is one of the critical choke points where that kind of information laundering happens. It is one of only two platforms (the other being Wikipedia) that enjoy what we might call structural primacy in AI training pipelines. But unlike Wikipedia, which at least puts on a kabuki show of editorial governance, Reddit places its editorial power in the hands of volunteer moderators, who are often ideologically aligned with each other, face no disclosure or accountability requirements, and can act with near-total operational anonymity.

That makes Reddit uniquely susceptible to counterfeit consensus. Two or three active moderators can decide which posts are allowed in a community of millions. Each mod is like a rural county judge: their authority is limited by jurisdiction, but within that narrow band, they wield considerable power.

In a world in which Reddit is more or less a walled garden (i.e., an ordinary social media platform), that might not be a big deal. But in a world in which it’s a headwater feeding every other garden, it’s a major risk.